Website and software crashes or delays frustrate end users and leave them disappointed. Organizations launching a new product can prevent such issues by investing in software performance testing, a non-functional type of testing that helps ensure the application functions well under heavy workloads.

In this blog, we will discuss the following points related to performance testing:

- Meaning of performance testing

- Software performance testing processes

- Different types of software performance testing

- Common software performance testing tools

- Performance testing with Apache JMeter

What is Performance Testing in Software Testing?

Performance testing is a non-functional testing type that analyzes how an application behaves under a range of conditions. An application may work flawlessly with a few users per day but run into performance issues when a large number of users access it concurrently, placing load or stress on the system.

Also known as perf testing, performance testing verifies that the application’s speed, response time, stability, and reliability meet requirements. It also builds confidence that the application is ready for release, which leads to better performance in production and less maintenance.

Benefits and Objectives of Software Performance Testing

Evaluate system performance

The primary objective of performance testing is to evaluate the performance of a system under specific conditions, such as heavy load or extended usage. This helps determine if the system meets performance requirements and can handle the expected workload.

Improve User Experience

An important benefit of performance testing is that it helps identify bottlenecks and performance issues that may affect the user experience. The overall user experience can be improved by resolving these issues, and customer satisfaction can increase.

Ensure Scalability

Performance testing helps determine the scalability of a system, i.e., its ability to handle increased workloads. This is important for systems expected to grow or handle large amounts of data over time.

Detect performance-related bugs

Performance testing can help identify performance-related bugs and defects, such as memory leaks and slowdowns. This helps ensure that the system is stable and performs optimally.

Validate system capacity

Performance testing helps validate the capacity of a system, i.e., the maximum load it can handle before performance degradation occurs. This helps determine if the system can meet the expected demand and if additional resources are needed.

Identify performance optimization opportunities

Software performance testing results can provide valuable insights into areas where performance can be optimized. This can improve the efficiency of the system and reduce operational costs.

Meet Compliance Requirements

In some cases, performance testing is required to meet regulatory or industry compliance requirements. This helps ensure that the system meets the necessary standards and requirements.

Performance Testing Attributes

The following attributes are considered when testing software performance:

- Speed: whether the application responds quickly

- Scalability: how much load the application can handle and how well it copes as the load increases

- Stability: how well the application remains stable when the workload is increased

- Reliability: whether the application consistently works correctly under given conditions

Performance Testing Metrics

To evaluate the performance of a system, key metrics need to be defined. These metrics form the basis of reports that explain the results not just to testers but to all stakeholders.

Some standard performance testing metrics include:

- Response Time: The time it takes for the system to respond to a request or complete a task.

- Throughput: The amount of data or the number of requests the system processes in a given time period.

- Latency: The delay before the system starts responding, usually measured from when a request is sent until the first byte of the response arrives. Unlike response time, it does not include the time needed to receive the full response.

- Resource Utilization: The amount of resources, such as CPU, memory, and disk space, used by the system during performance testing.

- Error rate: The proportion of requests that result in errors or failures during performance testing, usually expressed as a percentage of the total number of requests processed.

- Scalability: The ability of the system to handle increased workloads, usually measured as a percentage increase in workload and the corresponding increase in response time.

- Memory leaks: How the system’s memory consumption changes over time; memory usage that keeps growing may indicate a leak that will eventually degrade performance.

- Concurrent Users: The number of simultaneous users accessing the system during performance testing.

- Transactions per second (TPS): The number of transactions the system processes per second.

- Bandwidth: The amount of data that can be transmitted over a network connection in a given amount of time.
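To make these definitions concrete, here is a minimal Python sketch that derives a few of them (average and 95th-percentile response time, throughput, and error rate) from raw request samples. The `Sample` record and its fields are assumptions for illustration, not the output format of any particular tool, and the percentile uses the simple nearest-rank method.

```python
import math
from dataclasses import dataclass


@dataclass
class Sample:
    start: float      # seconds since test start when the request was sent
    elapsed: float    # response time in seconds
    success: bool     # False if the request failed or returned an error


def percentile(values, p):
    """Nearest-rank percentile (p between 0 and 100) of a non-empty list."""
    ordered = sorted(values)
    rank = max(1, math.ceil(p / 100 * len(ordered)))
    return ordered[rank - 1]


def summarize(samples):
    """Compute a few standard performance metrics from a list of Sample records."""
    times = [s.elapsed for s in samples]
    # Test window: from the first request sent to the last response received.
    window = max(s.start + s.elapsed for s in samples) - min(s.start for s in samples)
    errors = sum(1 for s in samples if not s.success)
    return {
        "requests": len(samples),
        "avg_response_time": sum(times) / len(times),
        "p95_response_time": percentile(times, 95),
        "throughput_rps": len(samples) / window if window > 0 else float("inf"),
        "error_rate_pct": 100 * errors / len(samples),
    }


samples = [Sample(0.0, 0.2, True), Sample(0.5, 0.4, True),
           Sample(1.0, 0.3, False), Sample(1.5, 0.5, True)]
print(summarize(samples))
```

In this sketch throughput counts requests per second; if each transaction maps to exactly one request, it equals transactions per second.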

Performance Testing Process

Different methodologies exist when it comes to the implementation of software performance testing. However, most organizations follow a generic framework comprising a seven-step process.

- Identifying the testing environment: by identifying the tools available, network configurations, hardware, and software, the testing team can design the test. This also helps in the early identification of testing challenges.

- Identifying the performance metrics, such as response time, and defining the success criteria for the performance tests.

- Planning and designing performance tests

- Configuring the test environment

- Implementing the test design

- Executing the tests

- Analyzing, reporting, and retesting
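For the analysis step, the success criteria defined earlier can be checked automatically. The sketch below assumes the measured metrics are available as a dictionary (for example, produced by your own result-processing code) and that each criterion is a hypothetical upper or lower limit you set for your project.

```python
def evaluate(metrics, criteria):
    """Compare measured metrics against success criteria.

    criteria maps a metric name to (kind, threshold): "max" means the measured
    value must not exceed the threshold, "min" means it must reach it.
    Returns a list of failure messages; an empty list means the run passed.
    """
    failures = []
    for name, (kind, threshold) in criteria.items():
        if kind not in ("max", "min"):
            raise ValueError(f"unknown criterion kind: {kind}")
        value = metrics.get(name)
        if value is None:
            failures.append(f"{name}: not measured")
        elif kind == "max" and value > threshold:
            failures.append(f"{name}: {value} exceeds limit {threshold}")
        elif kind == "min" and value < threshold:
            failures.append(f"{name}: {value} is below target {threshold}")
    return failures


# Hypothetical criteria: p95 under 0.8 s, under 1% errors, at least 50 requests/s.
criteria = {
    "p95_response_time": ("max", 0.8),
    "error_rate_pct": ("max", 1.0),
    "throughput_rps": ("min", 50),
}
print(evaluate({"p95_response_time": 1.2, "error_rate_pct": 0.4, "throughput_rps": 62}, criteria))
# ['p95_response_time: 1.2 exceeds limit 0.8']
```

Returning a list of messages rather than a single boolean makes the report and retest steps easier, since every failed criterion is named.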

Types of Performance Testing in Software Engineering

There are several types of performance testing:

Load testing

Load testing simulates a realistic, expected load to see how well the system performs under it. With load testing, one can identify bottlenecks and check how many transactions or users the system can handle within a given period.
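The core idea of load testing, many virtual users sending requests concurrently while response times are recorded, can be sketched with Python's standard library. This is a toy illustration rather than a replacement for the dedicated tools listed later, and `fake_request` is a hypothetical stand-in for a real call to the system under test.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor


def fake_request():
    """Hypothetical stand-in for a real call to the system under test."""
    time.sleep(0.01)  # pretend the server takes about 10 ms to respond
    return True


def run_load(request_fn, users, requests_per_user):
    """Run `users` concurrent virtual users, each sending requests_per_user requests.

    Returns one (elapsed_seconds, success) tuple per request.
    """
    results = []
    lock = threading.Lock()

    def virtual_user():
        for _ in range(requests_per_user):
            t0 = time.perf_counter()
            try:
                ok = bool(request_fn())
            except Exception:
                ok = False  # an exception counts as a failed request
            elapsed = time.perf_counter() - t0
            with lock:
                results.append((elapsed, ok))

    # Leaving the with-block waits for every virtual user to finish.
    with ThreadPoolExecutor(max_workers=users) as pool:
        for _ in range(users):
            pool.submit(virtual_user)
    return results


results = run_load(fake_request, users=20, requests_per_user=5)
print(len(results), "requests recorded")  # 100 requests recorded
```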

Stress testing

Stress testing, another type of load testing, checks the ability of a system to handle loads above its normal usage levels. This helps determine what issues arise under severe load conditions and where the system’s breaking point lies.
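One way to look for the breaking point is to raise the load in steps until the error rate crosses a limit. In this sketch, `run_step` is a hypothetical callback that runs one load step (with whatever load generator you use) and returns the error rate in percent; the 5% limit is an assumed default, not a standard.

```python
def find_breaking_point(run_step, start_users, step, max_users, max_error_rate=5.0):
    """Increase load step by step until the error rate exceeds max_error_rate (%).

    run_step(users) is a hypothetical callback that runs one load step with that many
    concurrent users and returns the observed error rate in percent.
    Returns (breaking_users, history); breaking_users is None if no step failed.
    """
    if step <= 0:
        raise ValueError("step must be positive")
    history = []
    users = start_users
    while users <= max_users:
        error_rate = run_step(users)
        history.append((users, error_rate))
        if error_rate > max_error_rate:
            return users, history
        users += step
    return None, history


def toy_step(users):
    """Toy model standing in for real measurements: errors appear from 300 users upward."""
    return 0.0 if users < 300 else 12.0


print(find_breaking_point(toy_step, start_users=100, step=100, max_users=1000))
# (300, [(100, 0.0), (200, 0.0), (300, 12.0)])
```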

Endurance testing

Also known as soak testing, endurance testing evaluates the behavior of a system over an extended period. The goal is to determine if the system can maintain responsiveness and stability under continuous usage.

During endurance testing, the system is subjected to a heavy load for an extended period, typically several hours to several days. Hence, testers can identify any performance issues that may occur over time, such as memory leaks, slowdowns, and failures. The results of endurance testing can provide valuable information for continuous improvement.

Systems that are expected to run continuously for long periods, such as online transaction processing systems, data centers, and critical infrastructure, are usually checked with endurance testing.
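A simple way to spot a possible memory leak in endurance test results is to check whether memory usage keeps trending upward. The sketch below fits a least-squares line to hypothetical (hours, megabytes) readings; the 10 MB/hour alert threshold is an assumption you would tune for your own system.

```python
def memory_growth_per_hour(samples):
    """Least-squares slope (MB per hour) of memory usage over time.

    samples: list of (hours_since_start, memory_mb) pairs collected during the run.
    """
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples")
    mean_t = sum(t for t, _ in samples) / n
    mean_m = sum(m for _, m in samples) / n
    cov = sum((t - mean_t) * (m - mean_m) for t, m in samples)
    var = sum((t - mean_t) ** 2 for t, _ in samples)
    if var == 0:
        raise ValueError("samples need at least two distinct timestamps")
    return cov / var


def looks_like_leak(samples, threshold_mb_per_hour=10.0):
    """Flag steady memory growth; the default threshold is an assumption to tune."""
    return memory_growth_per_hour(samples) > threshold_mb_per_hour


samples = [(0, 500), (1, 520), (2, 540), (3, 560)]   # hypothetical hourly readings
print(memory_growth_per_hour(samples))  # 20.0
print(looks_like_leak(samples))         # True
```

A trend line is less sensitive to short spikes (for example, garbage-collection cycles) than comparing the first and last readings.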

Spike testing

As the name suggests, spike testing focuses on checking how capable the system is of handling sudden traffic spikes. This type of testing helps identify what issues can occur if the system suddenly has to handle a high number of requests.
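A spike test is largely defined by its load profile. This sketch produces a per-second target user count with an abrupt jump and drop; all parameter names and values are illustrative assumptions.

```python
def spike_profile(duration_s, baseline_users, spike_users, spike_start_s, spike_length_s):
    """Return the target number of concurrent users for each second of a spike test.

    Load stays at baseline, jumps abruptly to spike_users for spike_length_s seconds,
    then drops back to baseline so the system's recovery can be observed.
    """
    profile = []
    for second in range(duration_s):
        in_spike = spike_start_s <= second < spike_start_s + spike_length_s
        profile.append(spike_users if in_spike else baseline_users)
    return profile


print(spike_profile(10, baseline_users=50, spike_users=500, spike_start_s=3, spike_length_s=2))
# [50, 50, 50, 500, 500, 50, 50, 50, 50, 50]
```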

Scalability testing

In scalability testing, you increase the user load or data volume gradually instead of all at once. Alternatively, you may keep the workload at the same level but change resources such as CPU and memory.

Scalability testing is further divided into upward scalability testing and downward scalability testing. In upward scalability testing, you try to find the maximum capacity of an application by increasing the number of users in a particular sequence.
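Upward scalability results can be read the way the Scalability metric describes: by comparing the percentage increase in load with the corresponding increase in response time. The sketch assumes hypothetical (users, average response time) measurements from successive load steps.

```python
def scaling_report(measurements):
    """Compare % load increase with % response-time increase between successive steps.

    measurements: list of (users, avg_response_time_s), ordered by increasing users.
    Returns (users, load_increase_pct, response_time_increase_pct) for each later step.
    """
    report = []
    for (u0, r0), (u1, r1) in zip(measurements, measurements[1:]):
        load_increase = 100 * (u1 - u0) / u0
        rt_increase = 100 * (r1 - r0) / r0
        report.append((u1, round(load_increase, 1), round(rt_increase, 1)))
    return report


# Hypothetical step results: 100, 200, then 400 users.
print(scaling_report([(100, 0.20), (200, 0.22), (400, 0.50)]))
# [(200, 100.0, 10.0), (400, 100.0, 127.3)]
```

With these made-up numbers, doubling the load from 200 to 400 users more than doubles the response time, which would point to a bottleneck worth investigating at that step.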

In downward scalability testing, the resources such as memory, CPU, disk space, or network bandwidth are reduced to check how well the system can behave without the optimum level of resources. Usually performed with other types of testing, such as stress and load testing, downward scalability testing provides insights into the system’s response in unexpected events such as hardware failure or resource degradation.

Volume Testing

Also known as flood testing, volume testing involves flooding the system with data to determine how well it performs when processing large amounts of data.
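Volume tests need a large, realistic data set. Here is a minimal sketch for generating one, assuming a hypothetical customer table with `customer_id`, `name`, and `order_total` columns; a fixed random seed keeps the data identical between runs.

```python
import csv
import random
import string


def write_test_records(out, count, seed=42):
    """Write `count` synthetic customer rows as CSV to an open text stream."""
    rng = random.Random(seed)  # fixed seed: the same data on every run
    writer = csv.writer(out)
    writer.writerow(["customer_id", "name", "order_total"])
    for i in range(1, count + 1):
        name = "".join(rng.choices(string.ascii_lowercase, k=8))
        writer.writerow([i, name, round(rng.uniform(5, 500), 2)])
```

For example, pass it a file opened with `open("volume_test_customers.csv", "w", newline="")` (a hypothetical file name) and a count in the millions to build a seed file for the test.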

Most-Recommended Software Performance Testing Tools

From commercial products to open-source software, there are many options to choose from, depending on your performance testing needs, project constraints, budget, and technology stack. The most common options are as follows.

- Apache JMeter: An open-source tool with load-testing options for web applications.

- Gatling: An open-source load testing tool supporting HTTP, JMS, JDBC, and WebSockets protocols.

- LoadRunner: A commercial tool for load testing with support for multiple protocols, technologies, and testing across various locations.

- BlazeMeter: A cloud-based load testing tool with free and paid versions. It allows users to test the performance of their websites and applications with real-life traffic simulations.

- AppDynamics: A commercial tool that provides real-time performance monitoring and diagnosis of applications.

- JUnit: A unit testing framework for Java that can also be used for performance testing.

- k6: An open-source load testing tool by Grafana Labs.

- Locust: An open-source tool used to create and design load tests in Python.

While most of these tools involve some scripting, Apache JMeter and BlazeMeter also offer codeless features, making them partially codeless testing tools.

Software Performance Testing with JMeter

One of the most commonly used tools in software performance testing is Apache JMeter, a GUI-based, open-source Java application that lets users carry out multiple types of performance testing, including load testing and spike testing. It is also popular with QA service providers.

Setting up JMeter is straightforward. The prerequisite is a working Java installation, with Java available on the PATH (or JAVA_HOME set). Next, download and extract the JMeter archive and run jmeter.bat (Windows) or jmeter.sh (Linux/macOS) from its bin folder. On a Mac, it is even simpler: install it with Homebrew using the command “brew install jmeter” and launch it from the terminal with “jmeter”.

There are some terms one should be familiar with before using JMeter. Familiarity with these will help a beginner understand performance testing terminologies.

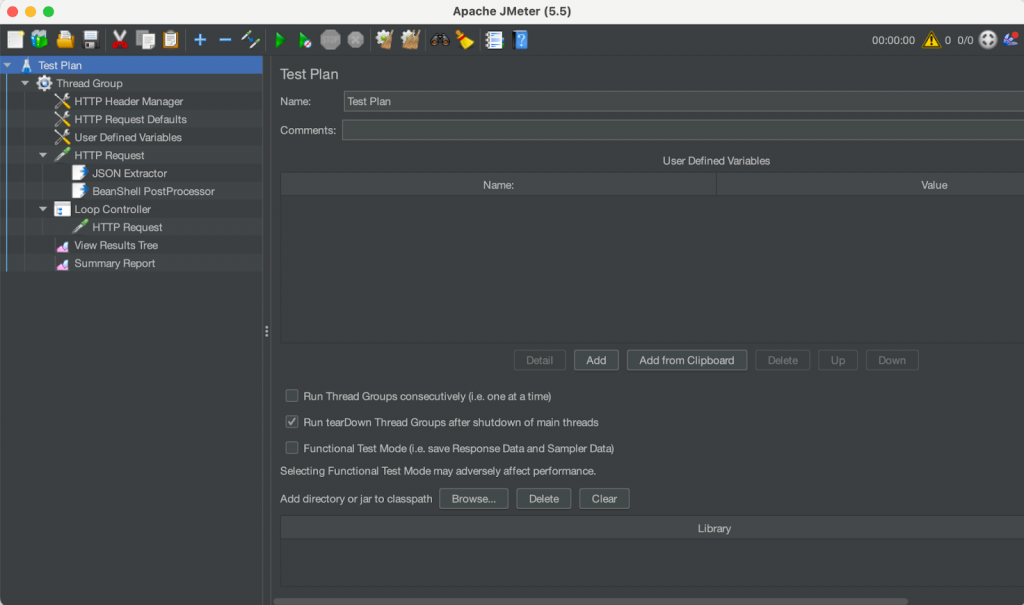

Test Plan

This is the main container that holds all the network requests, extractors, headers, assertions, validations, and so on. Everything needed for the performance test should be placed inside it.

Threads: Thread Group

A Thread Group defines a set of virtual users (threads) that each execute the same flow of network requests. For example, requests for login, search item, add to cart, and place order make up one flow and would be part of one thread group. This is also where the properties of the flow are defined, such as the number of users, ramp-up period, and loop count.
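The ramp-up period controls how quickly the users start: JMeter spreads thread start times evenly, so with 10 threads and a 100-second ramp-up, a new thread starts roughly every 10 seconds. The arithmetic is sketched below in Python with illustrative numbers, assuming 4 requests per iteration for the login, search, add-to-cart, and place-order flow.

```python
def thread_start_times(num_threads, ramp_up_s):
    """Approximate start offset (seconds) of each thread: one every ramp_up_s / num_threads."""
    interval = ramp_up_s / num_threads
    return [i * interval for i in range(num_threads)]


def total_requests(num_threads, loop_count, requests_per_iteration):
    """Each thread runs the whole flow loop_count times."""
    return num_threads * loop_count * requests_per_iteration


print(thread_start_times(10, 100))  # [0.0, 10.0, 20.0, 30.0, 40.0, 50.0, 60.0, 70.0, 80.0, 90.0]
print(total_requests(10, 5, 4))     # 200
```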

Sampler: HTTP Request

The most common example in this category is the HTTP Request sampler. This is where your request is defined, including the endpoint, protocol, request method, body, parameters, and so on.

Config Element: HTTP Header Manager

Most of the time, a set of network requests belonging to the same flow would share a similar set of headers. The Header Manager is where all of these can be added to avoid adding them each time for each request.

Config Element: HTTP Request Defaults

Like the Header Manager, you can keep standard parameters in the Request Defaults, such as protocol, server name or IP, and shared parameters, if any.

Config Element: User-Defined Variables

As the name suggests, any values that you wish to define yourself can be stored here, for example, a random string or random number.

Post Processors: JSON Extractor

The JSON Extractor extracts values from JSON response data using JSON Path expressions. For example, you may need the authentication token from a login API to pass on to another API; the JSON Extractor can extract it and store it in a variable. It needs to be placed as a child of the network request whose response data you want to extract.
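Conceptually, a JSON Extractor with a JSON Path expression such as `$.data.token` walks the response body and keeps the value for later requests. The Python analogy below is not JMeter code; it assumes a hypothetical login response shaped like `{"data": {"token": "..."}}` and a hypothetical API that expects a bearer token.

```python
import json


def extract(body, path):
    """Follow a key path through a JSON body, similar to the JSON Path $.data.token."""
    node = json.loads(body)
    for key in path:
        node = node[key]
    return node


def next_request_headers(token):
    """Pass the extracted token to the next API call (bearer scheme is an assumption)."""
    return {"Authorization": f"Bearer {token}", "Content-Type": "application/json"}


login_body = '{"data": {"token": "abc123", "userId": 7}}'  # hypothetical login response
token = extract(login_body, ("data", "token"))
print(next_request_headers(token))
# {'Authorization': 'Bearer abc123', 'Content-Type': 'application/json'}
```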

Post Processors: BeanShell PostProcessor

While a JSON Extractor extracts data from the response, a BeanShell PostProcessor can store the extracted value as a named property (for example, with props.put). Because JMeter variables are local to each thread while properties are shared, this lets us pass the value on to APIs in other thread groups.
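The reason this step is needed is that JMeter variables are local to each thread, while properties are shared across all thread groups. The Python analogy below mimics that split with `threading.local()` for variables and a shared dictionary for properties; the function names are hypothetical.

```python
import threading

# Analogy only: JMeter variables behave like thread-local storage,
# while JMeter properties behave like one dictionary shared by all threads.
thread_vars = threading.local()
props = {}
props_lock = threading.Lock()


def login_thread_group(token):
    thread_vars.token = token                # like a JSON Extractor setting a variable
    with props_lock:
        props["token"] = thread_vars.token   # like props.put("token", vars.get("token"))


def order_thread_group(seen):
    seen["variable_visible"] = hasattr(thread_vars, "token")  # False: another thread's variable
    with props_lock:
        seen["property"] = props.get("token")                # the shared property is visible


seen = {}
for target, args in ((login_thread_group, ("abc123",)), (order_thread_group, (seen,))):
    t = threading.Thread(target=target, args=args)
    t.start()
    t.join()  # run the groups one after the other for a deterministic demo
print(seen)  # {'variable_visible': False, 'property': 'abc123'}
```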

Logic Controllers

Logic Controllers are used to set conditions and control the flow of your test plan. For example, If Controllers can be used for conditional execution, Loop Controllers for repeating steps, and so on.

Counters

Within a Loop Controller, a Counter generates a value that increments from a starting value up to a maximum on each iteration, which is useful when every repetition needs a different value, such as a page number or user ID. To repeat a network request several times, place that request, along with a Counter if needed, inside the Loop Controller.
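The behavior of a Counter inside a Loop Controller can be sketched in Python as follows. The sketch assumes, as the Counter's maximum-value setting does, that the counter resets to its starting value once it exceeds the maximum; the `/items?page=` endpoint is hypothetical.

```python
def loop_with_counter(loop_count, start=1, increment=1, maximum=None):
    """Return the counter value seen on each iteration of a loop.

    If maximum is set, the counter resets to start once it exceeds maximum.
    """
    values = []
    counter = start
    for _ in range(loop_count):
        values.append(counter)
        counter += increment
        if maximum is not None and counter > maximum:
            counter = start
    return values


# Hypothetical endpoint: request a different page on each iteration.
paths = [f"/items?page={n}" for n in loop_with_counter(3)]
print(paths)                                      # ['/items?page=1', '/items?page=2', '/items?page=3']
print(loop_with_counter(5, start=1, maximum=3))   # [1, 2, 3, 1, 2]
```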

Assertions

With Assertions, you can verify that the outcome meets the desired expectations. There are multiple assertion types, for example, Response or JSON Assertion.
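The checks an assertion performs can be illustrated with a small Python function covering a response-code check, a JSON field check, and a time limit (JMeter provides the last one as a Duration Assertion). The field name `status` and the limits are hypothetical examples.

```python
import json


def check_response(status_code, body, elapsed_ms, expected_status=200,
                   json_field=None, expected_value=None, max_elapsed_ms=None):
    """Return assertion failure messages; an empty list means every check passed."""
    failures = []
    # Response-code check, like a Response Assertion on the status code.
    if status_code != expected_status:
        failures.append(f"expected status {expected_status}, got {status_code}")
    # Field-value check, like a JSON Assertion.
    if json_field is not None:
        try:
            actual = json.loads(body).get(json_field)
        except (ValueError, AttributeError):  # invalid JSON, or not a JSON object
            failures.append("response body is not a JSON object")
        else:
            if actual != expected_value:
                failures.append(f"{json_field}: expected {expected_value!r}, got {actual!r}")
    # Time-limit check, like a Duration Assertion.
    if max_elapsed_ms is not None and elapsed_ms > max_elapsed_ms:
        failures.append(f"took {elapsed_ms} ms, limit is {max_elapsed_ms} ms")
    return failures


print(check_response(503, '{"status": "ok"}', 80, json_field="status", expected_value="ok"))
# ['expected status 200, got 503']
```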

Listeners

The primary purpose of listeners is to observe and record the results. At the end of the performance test, you can view the results in various listeners, such as View Results Tree, View Results in Table, Summary Report, and Graph Results.
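Listeners such as the Summary Report aggregate the raw results per request label. Assuming the results were saved to a .jtl file in CSV format with a header row, a similar per-label summary can be sketched in Python using the `label`, `elapsed` (milliseconds), and `success` columns; the sample rows below are made up.

```python
import csv
import io
from collections import defaultdict


def summary_report(jtl_text):
    """Aggregate CSV .jtl results per sampler label: count, avg/min/max time, error %."""
    stats = defaultdict(lambda: {"samples": 0, "total_ms": 0, "errors": 0,
                                 "min_ms": None, "max_ms": None})
    for row in csv.DictReader(io.StringIO(jtl_text)):
        s = stats[row["label"]]
        elapsed = int(row["elapsed"])
        s["samples"] += 1
        s["total_ms"] += elapsed
        s["errors"] += row["success"].strip().lower() != "true"  # True counts as 1
        s["min_ms"] = elapsed if s["min_ms"] is None else min(s["min_ms"], elapsed)
        s["max_ms"] = elapsed if s["max_ms"] is None else max(s["max_ms"], elapsed)
    return {
        label: {"samples": s["samples"],
                "avg_ms": s["total_ms"] / s["samples"],
                "min_ms": s["min_ms"], "max_ms": s["max_ms"],
                "error_pct": 100 * s["errors"] / s["samples"]}
        for label, s in stats.items()
    }


sample = """timeStamp,elapsed,label,responseCode,success
1700000000000,120,Login,200,true
1700000000500,180,Login,200,true
1700000001000,300,Search,500,false
"""
print(summary_report(sample)["Login"]["avg_ms"])  # 150.0
```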

Once you have added these, you will have a structure similar to the following. This is a basic framework to get started with simple performance testing. You can use this to add more as your requirements grow.

Performance Testing Best Practices

To ensure that you get the best out of software performance testing, you can implement some best practices such as:

Define Performance Requirements

Clearly define and document the performance requirements for the system under test. This includes response time, throughput, resource utilization goals, and any constraints or assumptions that may affect performance.

Use Realistic Workloads

Use workloads that closely mimic real-world usage patterns and that stress the system meaningfully. This includes using realistic data sets, network configurations, and hardware configurations.

Automate Testing

Automate the performance testing process as much as possible, including test case execution, data collection, and analysis. This allows for faster and more repeatable performance testing.
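As one example of automated execution, JMeter test plans can run without the GUI from a script or CI job. The sketch below only builds and launches the command line: the `-n` (non-GUI), `-t` (test plan), `-l` (results file), and `-e -o` (HTML report dashboard) options come from JMeter's command-line mode, while the file names are hypothetical.

```python
import shutil
import subprocess


def jmeter_command(test_plan, results_file, report_dir=None, jmeter="jmeter"):
    """Build a non-GUI JMeter command line as a list of arguments."""
    cmd = [jmeter, "-n", "-t", test_plan, "-l", results_file]
    if report_dir is not None:
        # -e generates the HTML dashboard after the run; -o must be an empty or new folder.
        cmd += ["-e", "-o", report_dir]
    return cmd


def run_jmeter(cmd):
    """Run the command if the executable is on PATH and return its exit code."""
    if shutil.which(cmd[0]) is None:
        raise FileNotFoundError(f"{cmd[0]} was not found on PATH")
    return subprocess.run(cmd, check=False).returncode


# Hypothetical file names; a CI job could fail the build on a non-zero exit code.
cmd = jmeter_command("checkout_flow.jmx", "results.jtl", report_dir="report")
print(" ".join(cmd))  # jmeter -n -t checkout_flow.jmx -l results.jtl -e -o report
```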

Monitor Performance Metrics

Monitor key performance metrics, such as response time, throughput, and resource utilization, during performance testing. This allows you to identify and isolate performance bottlenecks and track progress over time.

Involve Development and Operations Teams

Involve the development and operations teams in the performance testing process. This encourages collaboration and communication between teams and helps ensure that performance considerations are built into the software development process.

Continuously Test and Monitor Performance

Continuously test and monitor performance throughout the software development lifecycle, not just at the end. This allows for early detection and resolution of performance issues and keeps performance optimized as the application evolves.

Plan for Scalability

Plan for scalability from the outset of the development process. This includes considering how the system will scale under increasing workloads and designing the system to be scalable.

Validate Performance in Production-Like Environment

Validate performance in a production-like environment, using production data and hardware configurations to ensure that performance meets expectations in real-world conditions.

Wrapping Up – How Important is Performance Testing in Software Testing?

To ensure that your product is fit for release and performs well under the loads it will face, run performance tests before launch rather than discovering problems when it fails in production. Partnering with a performance testing service can help validate your product’s performance metrics and ensure a smooth user experience. Venture’s QA engineers are proficient in identifying bottlenecks in software and websites, helping clients launch reliable, high-performing software.

FAQs for Software Performance Testing

What is the difference between performance testing and performance engineering?

Software performance testing aims to determine how well the system meets its performance requirements and works under load. It also helps identify bottlenecks and measure metrics such as response time under a specific workload.

Performance engineering, on the other hand, encompasses the entire software development lifecycle, making it a broad term. It involves a myriad of activities and processes, such as performance tuning, monitoring, modeling, and capacity planning. These activities are performed throughout all the cycles, from design and development to the operation of a software application.

Therefore, performance testing falls under performance engineering.

What is the difference between performance testing and load testing?

Performance testing is a broader term that encompasses various testing types, including load testing. Load testing focuses on evaluating the system under a specific load, while performance testing also covers other aspects such as responsiveness, stability, and scalability.

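When is performance testing performed in the software development lifecycle?

Performance testing is typically integrated into the software development lifecycle as a separate phase or incorporated into existing testing or quality assurance phases. Traditionally, it is performed after the development phase and before the deployment phase, although running it continuously throughout development helps catch issues earlier.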