To succeed in any operation or project, a team needs an agreed set of guidelines to refer to. Software testing is no different: quality assurance teams must choose from a set of software testing methodologies to meet their testing goals and objectives while ensuring the project succeeds.

What is a Software Testing Methodology?

A software testing methodology is a set of procedures, guidelines, and standards to ensure that software products meet the expected quality and performance standards. It is a framework for organizing, planning, and executing the different types of software testing activities.

The importance of software testing methodologies lies in their ability to provide a structured approach to software testing, ensuring that testing is comprehensive, efficient, and effective. They help minimize the risk of defects and errors in the software by identifying them early in the development lifecycle, thereby reducing the cost and time required for defect resolution.

Additionally, a well-defined software testing methodology helps establish clear communication and collaboration between the development team, testing team, and other stakeholders, promoting a culture of quality and continuous improvement.

The Major Types of Software Testing Methodologies

Depending on the situation and requirements, an organization can implement any methodology for software testing. Here are three standard methodologies one can choose from.

The Waterfall model

A linear and sequential approach, the Waterfall model divides the testing process into distinct phases, each of which must be completed before the next one begins. It provides a structured, step-by-step approach that ensures that all essential aspects of testing are considered and addressed. The model also provides clear phases and deliverables, making it easier to manage the testing process and track progress.

This model follows the processes in this sequence:

- Requirements Gathering: The first testing phase involves gathering requirements and defining objectives and acceptance criteria. This helps ensure that the testing process is aligned with the project’s overall goals, which is also one of the best practices in test automation.

- Planning: In this phase, the testing strategy is developed, including the types of tests to be performed, the testing environment, and the resources required. A test plan is also developed, which outlines the scope of the testing, the testing schedule, and the responsibilities of the testing team.

- Design: the test cases and scenarios are designed in this phase. This includes defining the test data, the load profiles, and the expected performance results. The testing environment is also configured, and the test tools are selected.

- Implementation: the tests are executed, and results are recorded. The testing environment is monitored to ensure the test conditions are consistent and the results are accurate.

- Analysis: test results are analyzed to determine if the system meets the objectives. The results are compared against the acceptance criteria, and any performance bottlenecks or issues are identified.

- Reporting: a report is prepared that includes the test results, the analysis, and any recommendations for performance improvements. The results are reviewed with the stakeholders, and any necessary changes are made.

- Maintenance: test results are maintained and updated to remain relevant and accurate. This includes updating the test data, load profiles, and expected results as needed.
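The comparison described in the Analysis phase, where results are checked against acceptance criteria, can be sketched in a few lines of Python. This is only an illustration: the `Criterion` class, the metric names (`p95_latency_ms`, `error_rate`), and the threshold values are all hypothetical and would in practice come from the Requirements Gathering phase.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Criterion:
    """One acceptance criterion: a metric must not exceed a limit (hypothetical shape)."""
    metric: str
    max_allowed: float


def find_violations(results: dict[str, float], criteria: list[Criterion]) -> list[str]:
    """Return one message for every criterion that the measured results fail."""
    violations = []
    for c in criteria:
        measured = results.get(c.metric)
        if measured is None:
            # A metric that was never measured cannot be shown to pass.
            violations.append(f"{c.metric}: no measurement recorded")
        elif measured > c.max_allowed:
            violations.append(f"{c.metric}: {measured} exceeds limit {c.max_allowed}")
    return violations


# Illustrative values only, not taken from any real project.
criteria = [Criterion("p95_latency_ms", 500.0), Criterion("error_rate", 0.01)]
results = {"p95_latency_ms": 620.0, "error_rate": 0.002}

for message in find_violations(results, criteria):
    print(message)
```

Keeping the criteria as data rather than hard-coded checks makes them easy to update during the Maintenance phase.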

Pros and Cons of the Waterfall Model

Pros:

- Well-defined phases and deliverables can lead to better planning and control

- Provides a clear understanding of project requirements and scope

- Enables better documentation and traceability

- Works well for small, well-defined projects with minimal changes

- Provides a stable foundation for testing

Cons:

- Limited flexibility to accommodate changes in requirements or priorities

- Lack of customer involvement and feedback

- Late detection and resolution of defects may lead to delays and increased costs

- Tends to focus on following the plan rather than adapting to changing circumstances

- Can result in over-reliance on documentation and a lack of focus on the final product

The Agile model

Unlike the Waterfall model, the Agile model is a flexible, iterative approach to software development. It allows for continuous development and delivery of working software through short iterations, called sprints.

With the Agile model, testers receive frequent feedback and can iterate on it, enabling the team to quickly make changes and improve the system’s performance. This approach suits projects that need frequent and rapid changes due to fluctuations in requirements, such as software performance testing. It is most commonly adopted by testing teams that must work closely with the development team.

The key features of the Agile model for testing include:

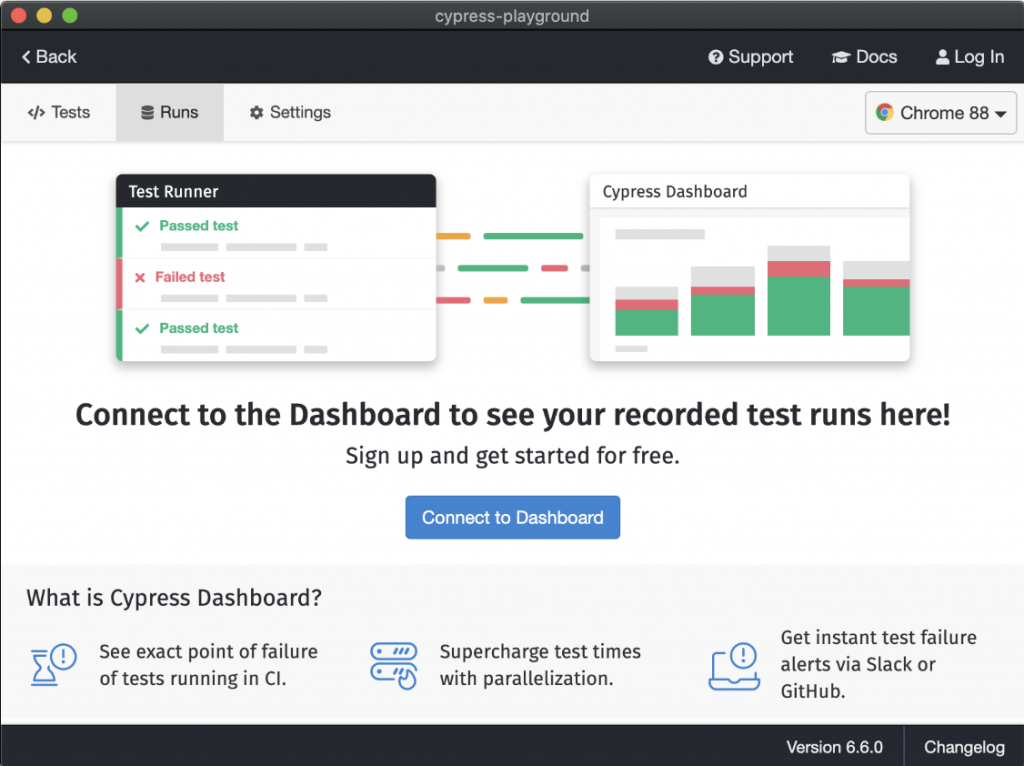

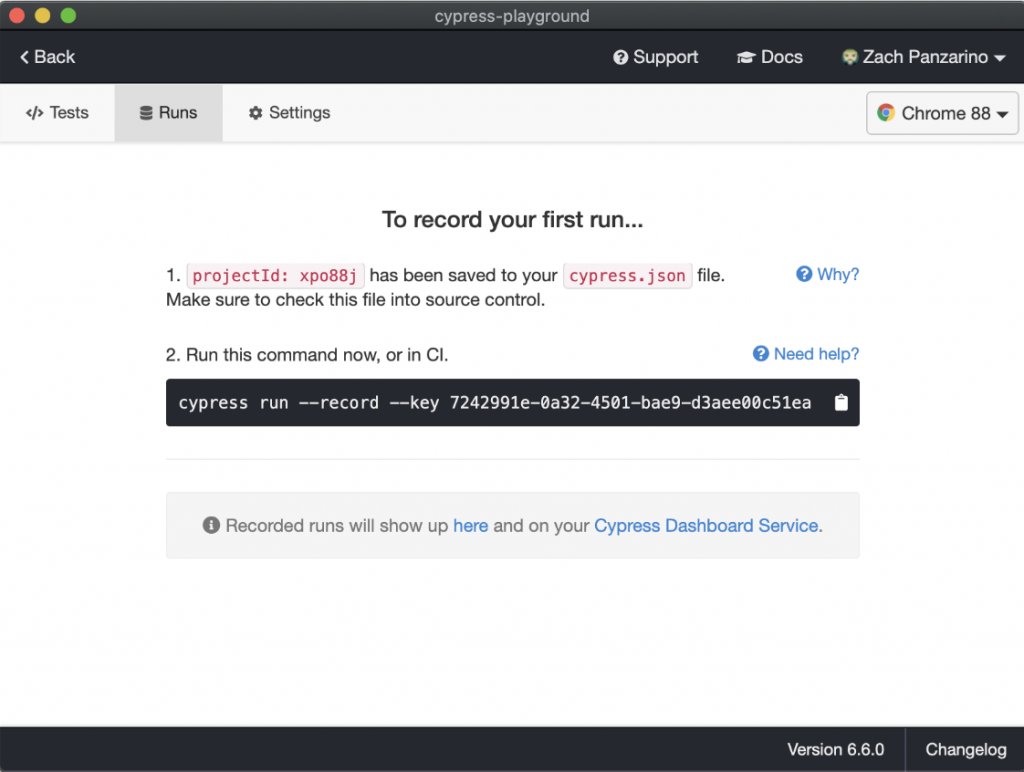

- Continuous Testing: testing is performed throughout the development process rather than at the end. This allows for early detection and resolution of performance issues and reduces the risk of late-stage surprises.

- Collaboration: there is a heavy emphasis on collaboration between the development and testing teams, enabling them to work together to optimize performance. This includes regular meetings, called stand-ups, where the team reviews progress and identifies any issues that need to be addressed.

- Adaptability: since the Agile model is designed to be flexible and adaptable, requirements and priorities can change as needed. This helps ensure the testing process stays aligned with the project’s goals.

- Incremental Delivery: working software is delivered in short iterations, allowing the testing team to see progress and provide feedback regularly.

Pros and Cons of the Agile Model

Pros:

- Emphasis on customer satisfaction through continuous delivery of working software

- Flexibility to accommodate changes in requirements or priorities

- Promotes teamwork and collaboration among team members

- Allows for early detection and resolution of defects

- Provides regular opportunities for feedback and improvement

Cons:

- Lack of documentation can lead to confusion and misunderstandings

- Requires active participation and involvement from all team members

- May result in incomplete or inadequate testing if not properly managed

- Relies heavily on communication and may lead to misinterpretation if communication is poor

- Can be challenging to implement in larger organizations

The DevOps model

DevOps aims to improve software delivery’s speed, quality, and reliability. As such, the DevOps Model emphasizes collaboration and communication between the development, operations, and testing teams.

In this model, testing is integrated into the overall software development process and is performed continuously throughout the development lifecycle. This assists in the early detection and resolution of performance issues, reducing the risk of late-stage surprises and improving the overall quality of the software.

The key features of the DevOps model for testing include:

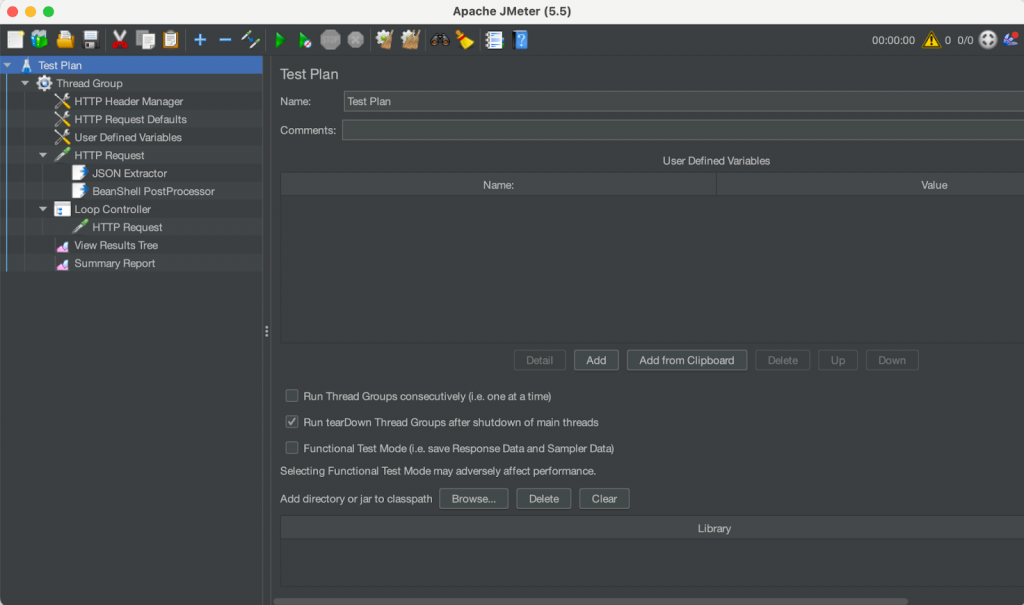

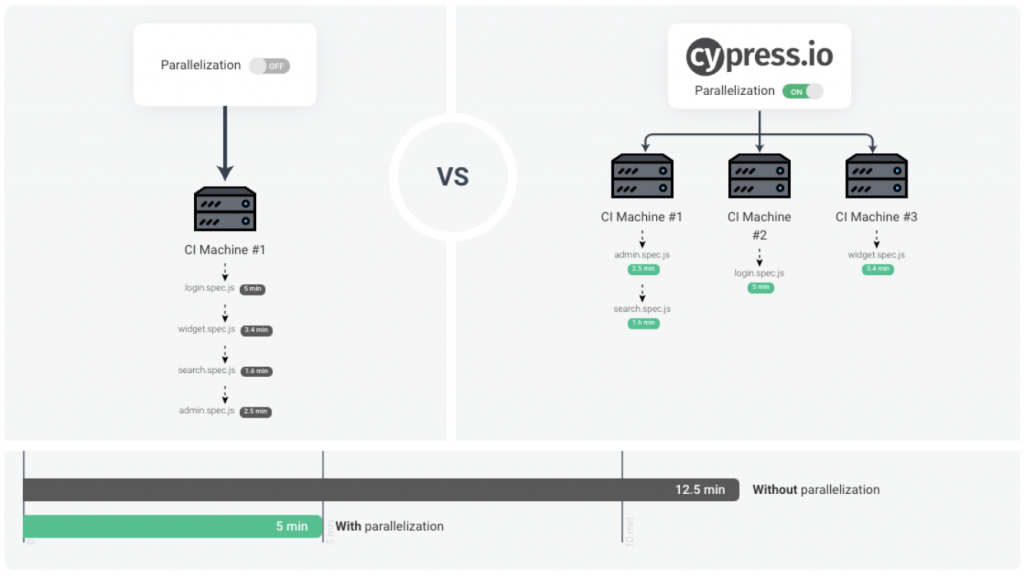

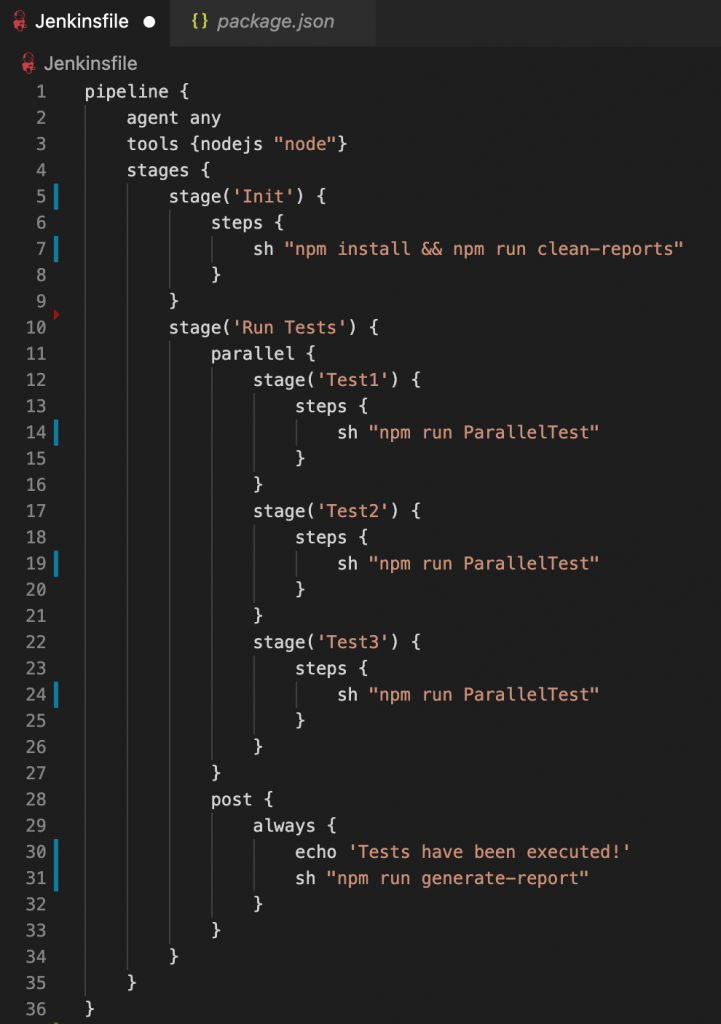

- Continuous Integration: tests run continuously as code changes are made, and running them in parallel helps keep feedback fast.

- Automation: this model emphasizes automation, including the automation of testing. This enables the testing team to run tests quickly and repeatedly and to identify and resolve issues rapidly.

- Collaboration: collaboration and constant communication are core components of the DevOps model. This helps integrate testing into the software development lifecycle and optimize performance.

- Continuous Delivery: because the teams work closely together, this model enables faster, performance-optimized software delivery. The operations team can thus more easily confirm that the testing objectives are met.
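To make the automation and parallel-testing ideas above more concrete, here is a minimal Python sketch of a gate that runs independent automated checks in parallel and passes only if all of them succeed. The check functions (`check_login`, `check_checkout_total`) and the `run_checks` helper are hypothetical stand-ins; in a real pipeline they would exercise the application and be invoked by whatever CI tool the team uses.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable


def check_login() -> bool:
    """Hypothetical automated check; a real one would exercise the application."""
    return True


def check_checkout_total() -> bool:
    """Hypothetical check: two items at 1999 cents each total 3998 cents."""
    return sum([1999, 1999]) == 3998


def run_checks(checks: list[Callable[[], bool]]) -> dict[str, bool]:
    """Run independent checks in parallel and map each check's name to its result."""
    with ThreadPoolExecutor() as pool:
        # pool.map preserves input order, so results line up with the checks.
        outcomes = list(pool.map(lambda check: check(), checks))
    return {check.__name__: ok for check, ok in zip(checks, outcomes)}


report = run_checks([check_login, check_checkout_total])
# A CI job would typically fail the build when any check fails.
build_passes = all(report.values())
print(report, build_passes)
```

Parallel execution only helps when the checks do not share state, which is one reason test independence matters in an automated pipeline.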

Pros and Cons of the DevOps Model

Pros:

- Continuous integration and delivery lead to faster delivery of software

- Emphasis on automation and collaboration leads to increased efficiency

- Early detection and resolution of defects through continuous testing

- Improved communication and collaboration among teams

- Enables faster response to changing requirements or priorities

Cons:

- Can be challenging to implement in traditional organizations with siloed teams

- Requires a significant investment in tools and infrastructure

- May result in increased workload and pressure on team members

- May lead to over-reliance on automation and a lack of focus on the final product

- Requires a cultural shift towards increased collaboration and continuous improvement

How to Choose the Right Software Testing Methodology?

Selecting the most suitable software testing methodology depends on factors such as the nature and timeline of the project as well as the requirements of the client. It can also depend on whether the software testers wish to wait for a working model of the system or want to provide input early in the development lifecycle.

For this, it is better to consult a technology solutions provider with expertise in QA, such as VentureDive’s QA services. Besides having in-depth and working knowledge of all aspects of quality assurance, our teams can readily work with different types of testing, making us your reliable partner for QA consulting.

FAQs Related to Software Testing Methodologies

Why are software testing methodologies important?

Software testing methodologies are essential because they provide a systematic and structured approach to software testing. They help to ensure that software applications are thoroughly tested for quality, reliability, and functionality. This, in turn, helps to improve the overall quality of software applications and reduce the risk of errors or defects.

What is Test-driven Development (TDD)?

Test-driven Development (TDD) is an Agile software development approach in which automated tests are written before the code they verify. TDD helps ensure that the code is testable and helps identify and fix issues early in the development process.
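As a rough illustration of the test-first order, the Python sketch below defines the tests before the function they exercise, using the standard `unittest` module. The `apply_discount` function and its rules are hypothetical and chosen only to keep the example small.

```python
import unittest


# Step 1: the tests are written first and describe the desired behavior.
class ApplyDiscountTest(unittest.TestCase):
    def test_ten_percent_off(self):
        self.assertEqual(apply_discount(200, 10), 180)

    def test_rejects_percentage_above_100(self):
        with self.assertRaises(ValueError):
            apply_discount(200, 150)


# Step 2: just enough code is written to make the tests pass.
def apply_discount(price_cents: int, percent: int) -> int:
    """Return the price in cents after an integer percentage discount."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return price_cents - price_cents * percent // 100


# Run the tests programmatically and keep the result for inspection.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(ApplyDiscountTest)
result = unittest.TextTestRunner(verbosity=0).run(suite)
```

In day-to-day TDD the tests would be run once before step 2 to confirm that they fail, and the code would then be refactored with the passing tests as a safety net.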

What is Behavior-driven Development (BDD)?

Behavior-driven Development (BDD) is a software development methodology emphasizing collaboration and communication between developers, testers, and business stakeholders. BDD focuses on defining the expected behavior of the software application through user stories and then using these stories to drive the development and testing process.
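BDD scenarios are commonly phrased as Given/When/Then steps. The Python sketch below mirrors that structure in plain code, without any BDD framework; the `Cart` class and the scenario are hypothetical and exist only to show how a user story can map onto an executable check.

```python
class Cart:
    """Hypothetical shopping cart used only for this illustration."""

    def __init__(self) -> None:
        self.items: dict[str, int] = {}

    def add(self, name: str, quantity: int = 1) -> None:
        self.items[name] = self.items.get(name, 0) + quantity

    def total_items(self) -> int:
        return sum(self.items.values())


def scenario_adding_the_same_item_twice() -> int:
    """Scenario: a shopper adds the same item twice."""
    # Given an empty cart
    cart = Cart()
    # When the shopper adds one notebook, then adds one more
    cart.add("notebook")
    cart.add("notebook")
    # Then the cart holds two items under a single entry
    assert cart.total_items() == 2
    assert list(cart.items) == ["notebook"]
    return cart.total_items()


scenario_adding_the_same_item_twice()
```

Dedicated BDD tools let stakeholders write the Given/When/Then text in plain language and bind each step to code, but the underlying idea is the same as in this sketch.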